Problems

In reality, there are three subproblems to this:

- Predicting the instruction type: is this instruction a branch (i.e., can it cause non-sequential control transfer)?

- Yes: {B, BR, B.cond, BL, BLR, RET}; no: everything else in ISA

- Predicting the branch outcome: If the instruction is a branch, will it be taken or not?

- Unconditionally taken: {B, BR, BL, BLR, RET}; conditionally taken: {B.cond}

- Predicting the branch target: If the branch is taken, to which non-sequential address will it transfer control?

- Statically known: {B, BL, B.cond}; dynamic: {BR, BLR, RET}

We need to make relevant predictions based solely on PC value and prior knowledge.

What we want to do is gather information from what happened before.

Predicting Instruction Type

Observation: the type of the instruction at a program counter (PC) is static

Implication: maintain a lookup table (the Instruction Status Table, or IST) to record and look up this information.

There are some considerations of this: size of the table, taking advantage of instruction alignment, keeping additional information in IST, aliasing between instructions.

Predicting the Branch Outcome

Imagine we have C code like the following:

void foo() {

for(int i = 0; i < 5; i++) {

...

}

}Simplified assembly can be described as:

.foo:

mov r0, 0

.loop:

cmp r0, 5

beq .exit

add r0, r0, 1

bne .loop

.exit:

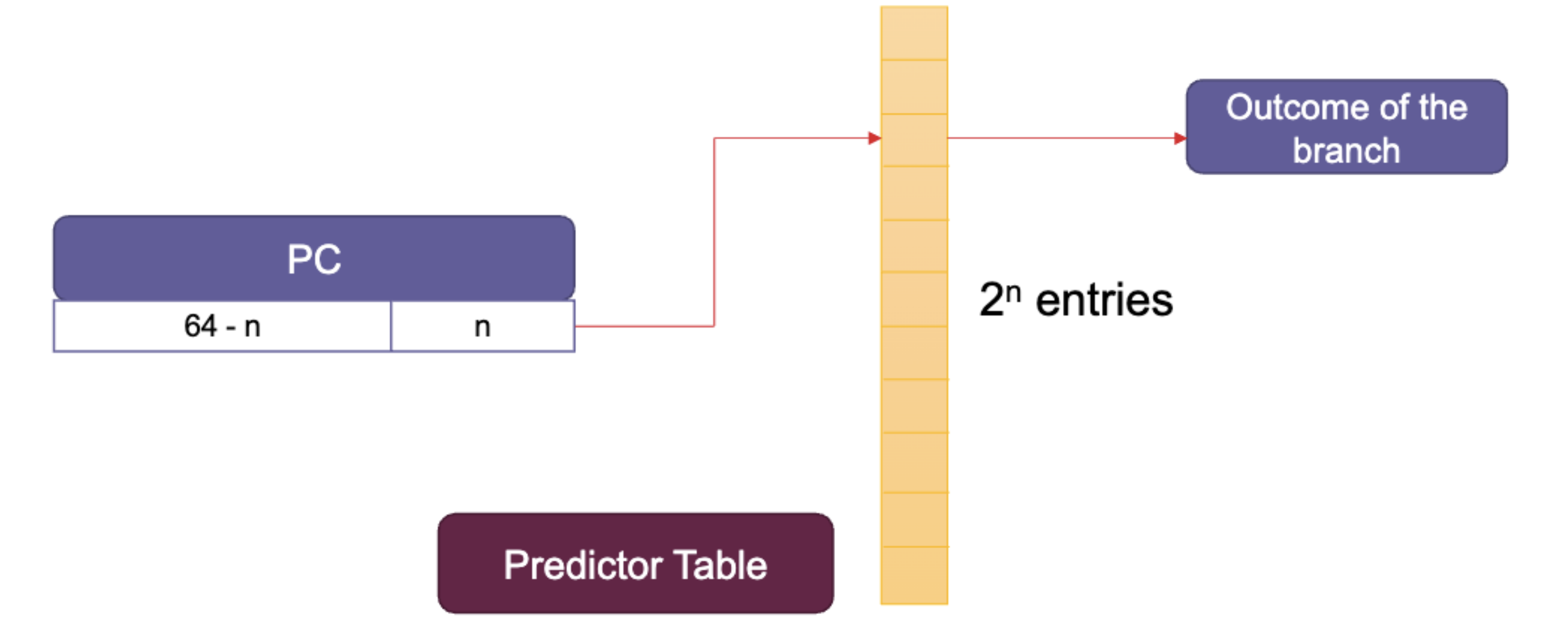

NOPThe loop is the critical path and most of the time will be predicted not taken. We will use a simple bimodal predictor.

If we use a single-bit predictor, we remember the last outcome, and the current prediction becomes the last outcome.

The misprediction rate is for the first call to foo and for the second and onwards.

Improving Accuracy

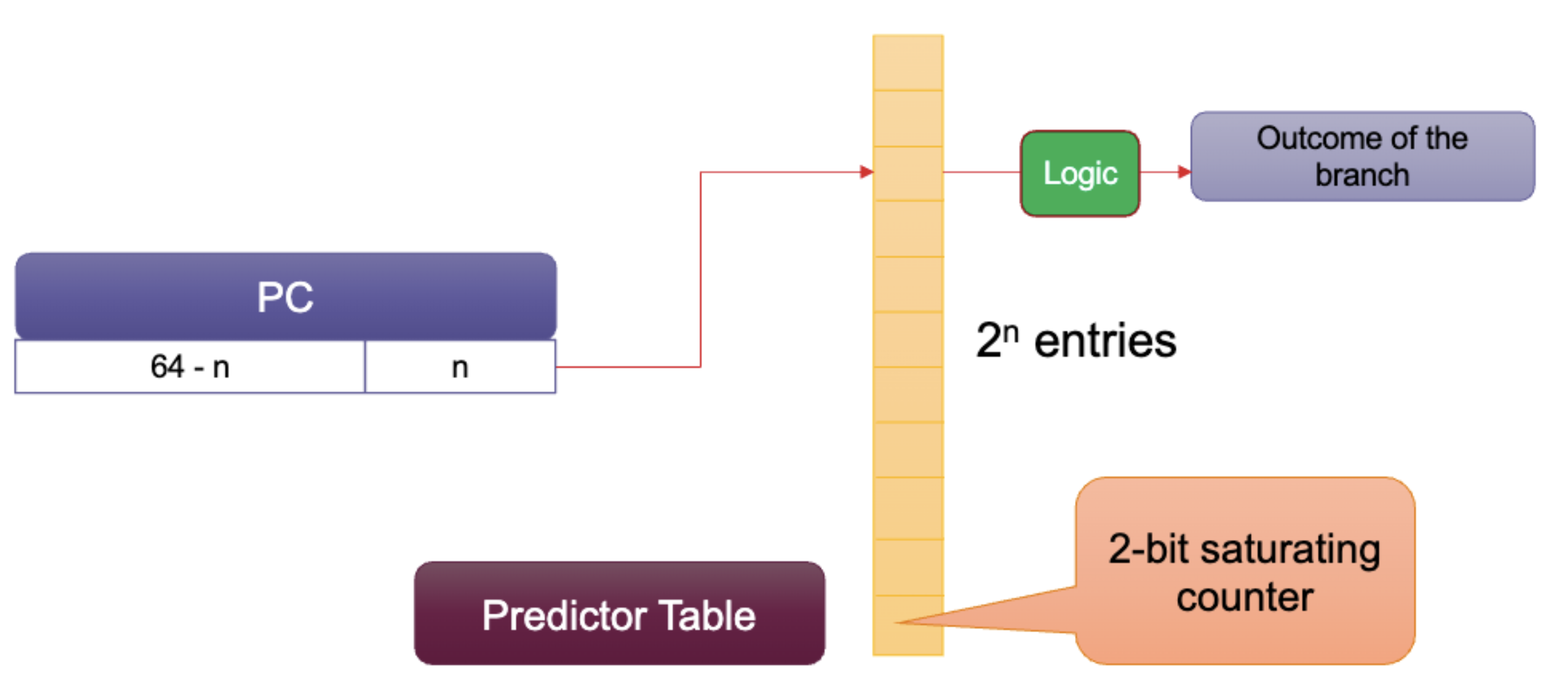

We can always add extra bits to the table.

The beq instruction in foo is fundamentally biased towards not taken

- One exception at loop exit shouldn’t impact this tendency significantly

- Add hysteresis

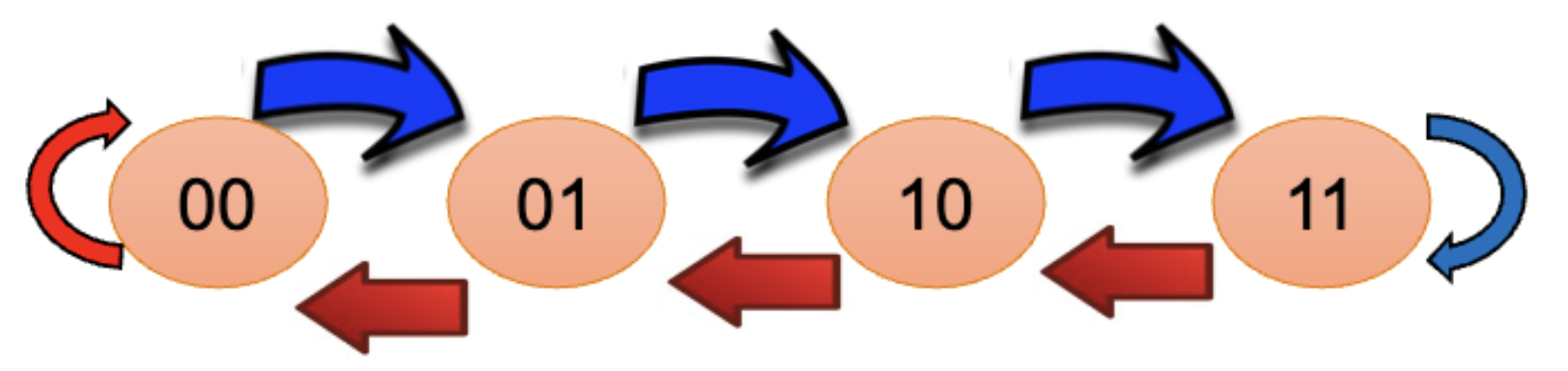

Use a saturating counter, increment when taken, decrement when not taken.

We can predict not taken when we have 00 or 01, and predict taken when we have 10 or 11.

This is a Moore finite state machine. We start from 01 and the misprediction rate eventually decreases to .

Global History

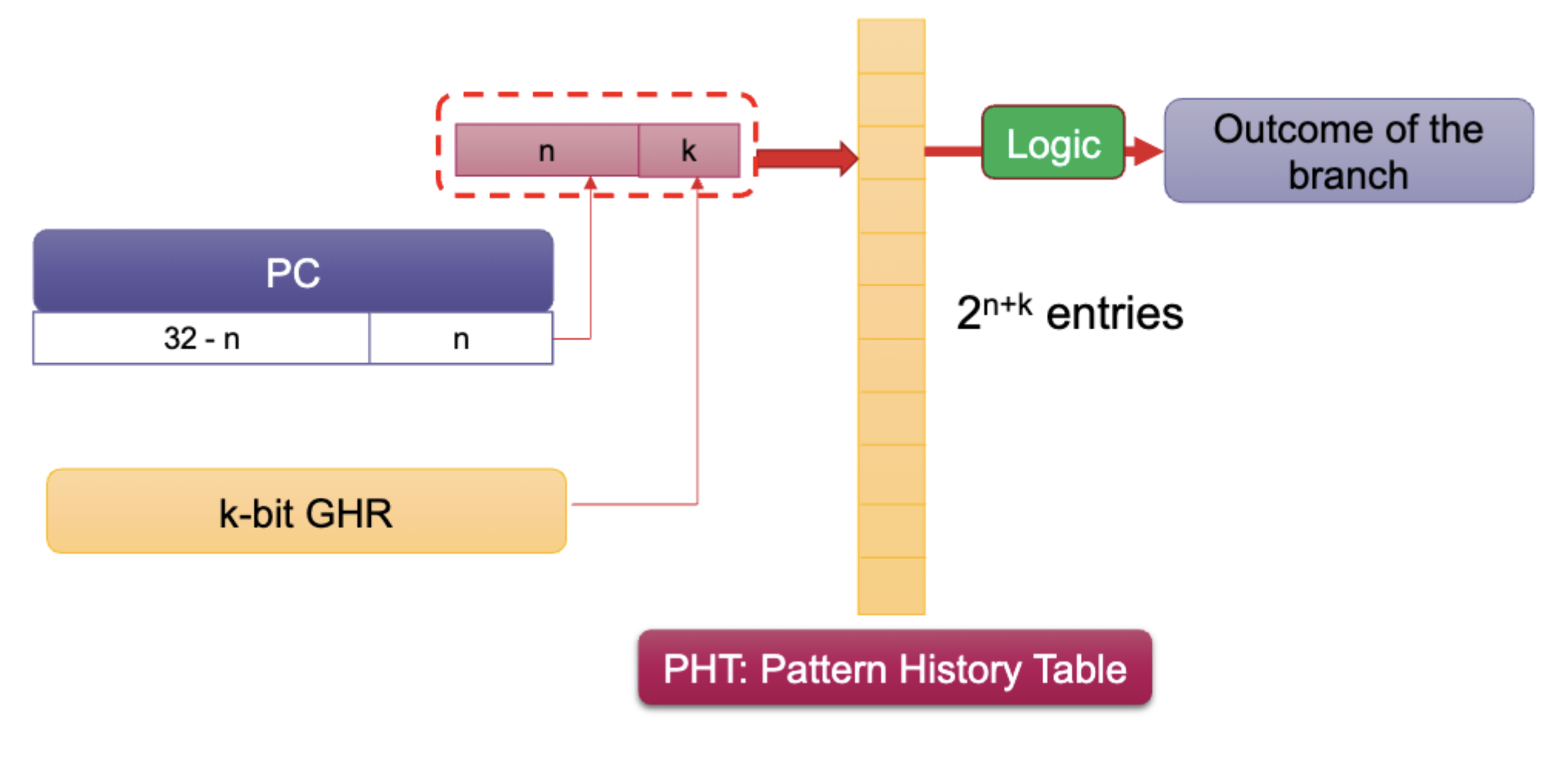

We need to have a shift register that records the history of the last branches encountered by the processor.

We have one bit for each branch, regardless of the PC. We record 1 for branch taken and 0 for branch not taken.

Consider a 2-bit global history register (GHR) like this:

We have two conditional branches in the running example:

We have two conditional branches in the running example: beq .exit and bne .inc

Use both bits of the GHR and least-significant bits of the PC to index into the pattern history table (PHT). The PHT has entries and increased accuracy.

This is also called a GAp two-level adaptive predictor: G for Global, A for Adaptive, p for per-branch/pc.

Invented by UT professor Yale Patt! Yeh & Patt 1991.

Bits used by different configurations

GAp:

GAg:

PAg:

Leveraging Local and Global Information

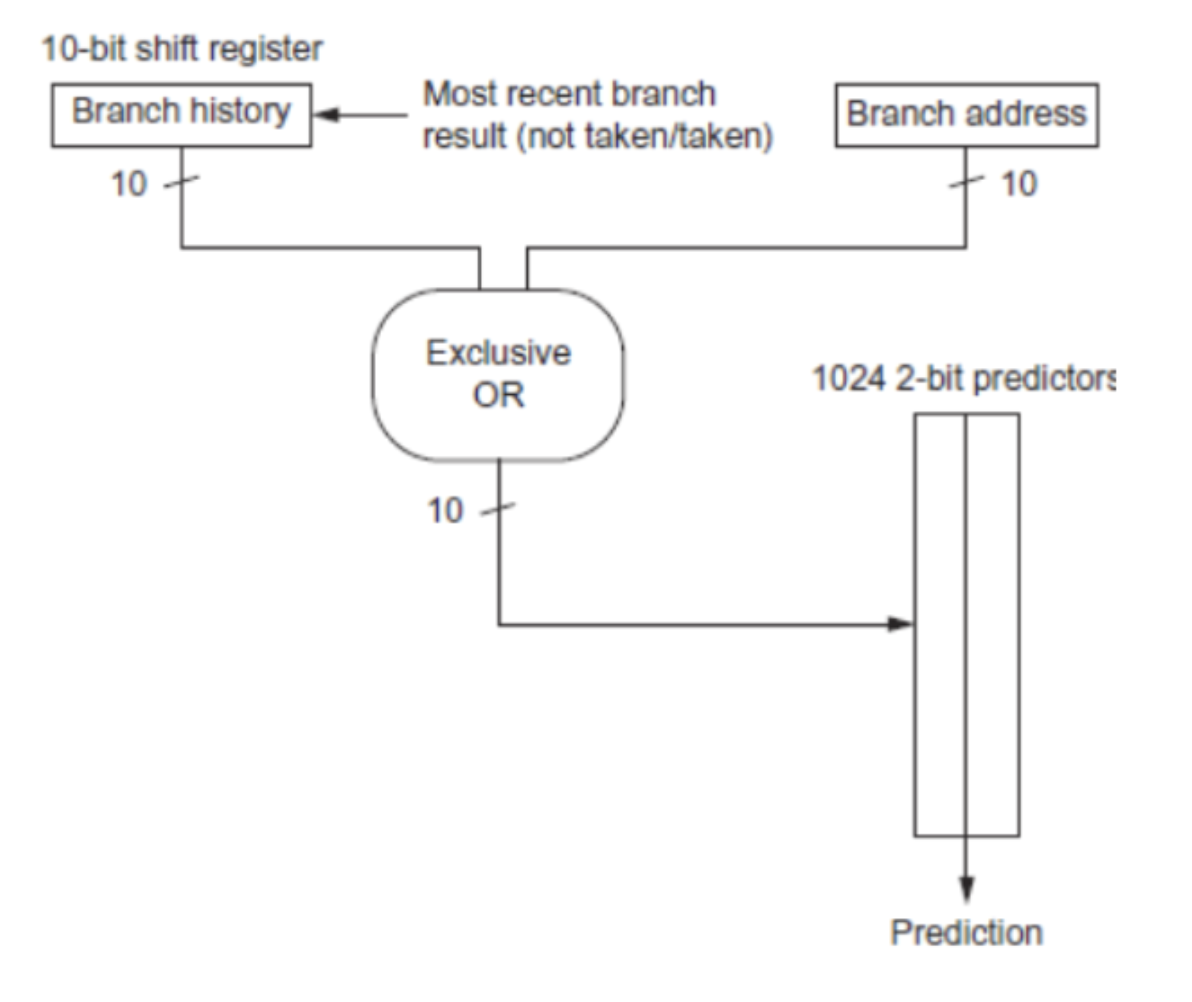

The previous predictors used a ton of data and state to be able to predict branches. We can, however, just use a simple XOR with shared indexes into a prediction table. Eventually, a bunch of addresses and behaviors will converge into a behavior and be placed at a certain index in the table which will then be trained onto local history. Called the “gshare” predictor.

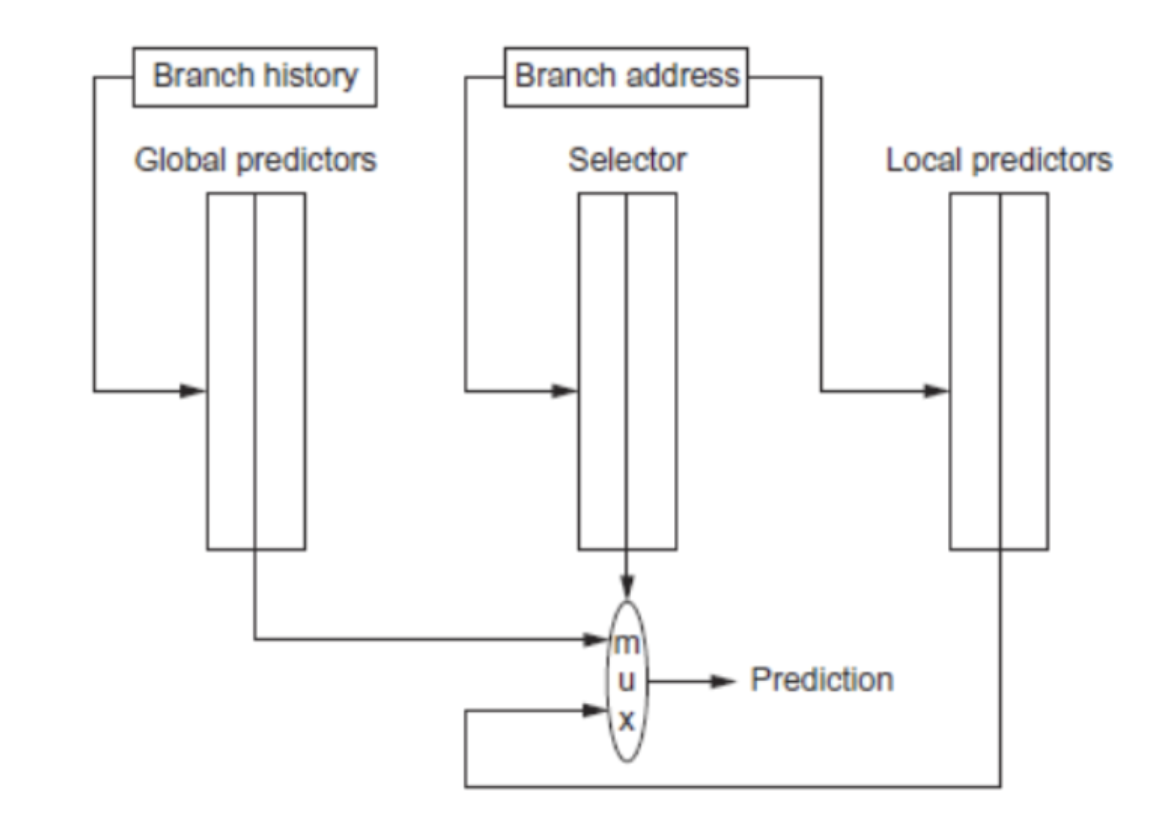

There are also combining or “tournament” predictors used in Alpha 21264.

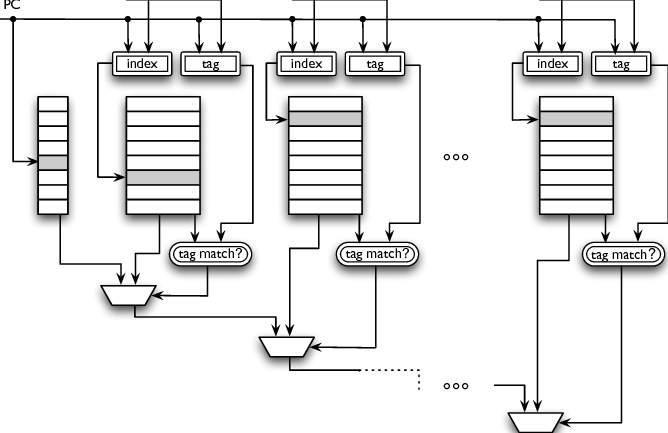

Tagged Hybrid Predictors

Stands for TAgged GEometric predictor (TAGE). It keeps multiple predictions with different history lengths: . The hash function can once again just be an XOR. It partially tags predictions to avoid false matches and only provides a prediction on match.

Neural Branch Predictor

Invented by UT Student and Professor Calvin Lin! Jiménez & Lin 2002.

The algorithm is to hash the branch address to produce an index into the table of perceptrons. Fetch perceptron from the table into a vector register of weights . Compute as the dot product of and the global branch history register (BHR). BHR is encoded as 1 for a branch that is taken and -1 for a branch that is not taken. Predict branch as not taken of and taken otherwise. Once the branch is resolved, use the training algorithm to update the weights in . Write back the updated into entry of the table of perceptrons.

The dot product is the slowest/hardest operation so we need to optimize it. To approximate the dot product, we will make the weights small-width integers because we don’t need insane accuracy. Since BHR entries are conceptually , we just need to add to if it is 1 and subtract if -1. For subtraction we just use . Since we only need the sign bit to predict, the other bits can be computed slower.

The training algorithm takes in 3 inputs: where is the branch outcome and is a training threshold parameter (hyperparameter). If then forall . Empirically, the best threshold for a given history length is .

Predicting the Branch Target

Where is the branch target calculated?

- For B, BL, and B.cond, in late Fetch or early Decode stages

- For BLR or RET, in late Decode

This is too late to fetch the correct branch target instruction in the next cycle, even if the prediction is correct, so we need a way of storing the data so we can access it quickly.

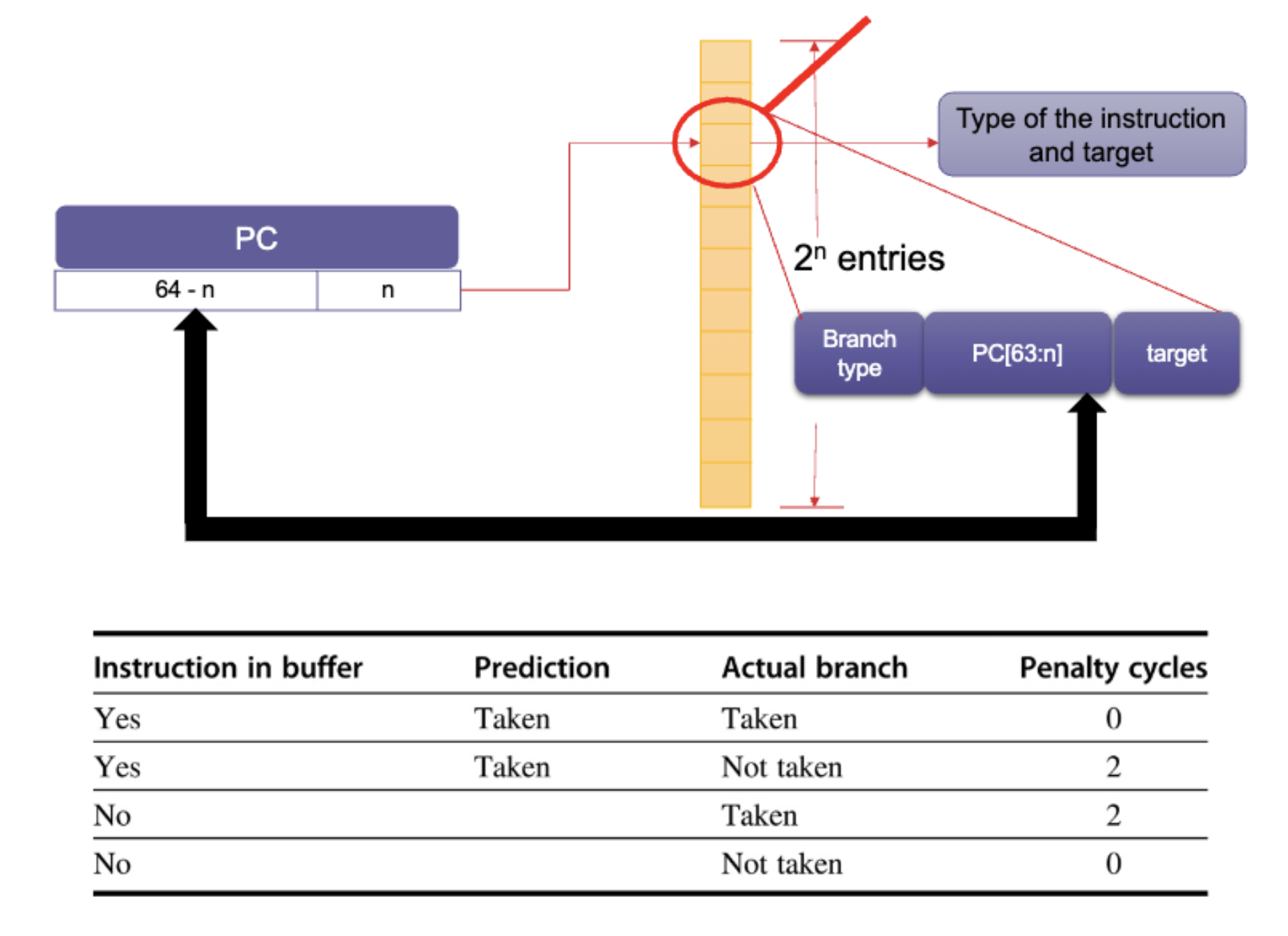

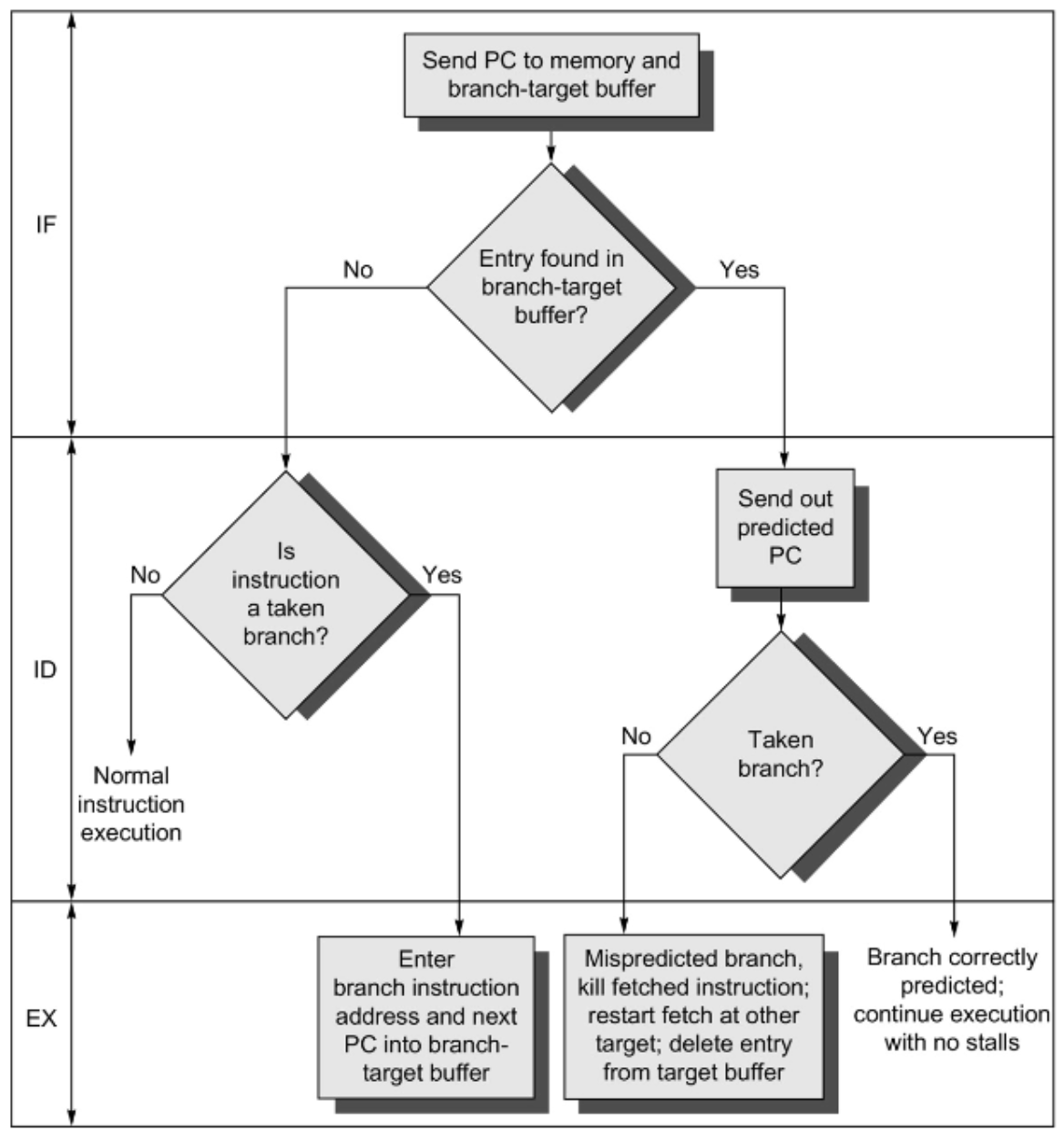

We extend the instruction status table (IST) to keep this information, we call it the Branch Target Buffer (BTB).

The variation is to keep target instructions in the BTB along with (or instead of) the branch target address. This allows branch folding: zero-cycle penalty for unconditional branches (and sometimes for conditional branches as well).

Special Case: Predicting Return Addresses

Since normal call-return protocols are LIFO, use a stack of return addresses to predict branch targets of RET instructions with 100% accuracy (as long as the stack doesn’t overflow). This is called a Return Address Stack (RAS). Push return address when function is called and pop return address when RET is encountered. There are typically 8-16 entries in the stack.

An issue arises, though, how can we handle call depths deeper than this?

We just spill to memory.

A RET is effectively a BR. However, they tell the module whether or not this is a function return within the ISA itself depending on if you used RET or BR, which could be used to add additional details.